Preface

With the rapid advancement of generative AI models such as DALL·E, industries are experiencing a revolution through automation, personalization, and enhanced creativity. However, this progress raises pressing ethical challenges, including bias reinforcement, privacy risks, and potential misuse.

According to a 2023 MIT Technology Review study, a large majority of AI-driven companies have expressed concerns about ethical risks. These findings signal a pressing need for AI governance and regulation.

The Role of AI Ethics in Today’s World

AI ethics refers to the principles and frameworks governing the responsible development and deployment of AI. Without ethical safeguards, AI models may exacerbate biases, spread misinformation, and compromise privacy.

A recent Stanford AI ethics report found that some AI models perpetuate unfair biases based on race and gender, leading to discriminatory algorithmic outcomes. Addressing these ethical risks is crucial for creating a fair and transparent AI ecosystem.

How Bias Affects AI Outputs

A major issue with AI-generated content is bias. Because AI systems are trained on vast amounts of human-generated data, they often inherit, and can amplify, the biases present in that data.

The Alan Turing Institute’s latest findings revealed that many generative AI tools produce stereotypical visuals, such as associating certain professions with specific genders.

To mitigate these biases, organizations should conduct fairness audits, integrate ethical AI assessment tools, and establish AI accountability frameworks.

Deepfakes and Fake Content: A Growing Concern

AI technology has fueled the rise of deepfake misinformation, threatening the authenticity of digital content.

In several recent deepfake scandals, AI-generated media was used to manipulate public opinion. According to a Pew Research Center survey, over half of respondents fear AI's role in spreading misinformation.

To address this issue, businesses need to enforce content authentication measures, educate users on spotting deepfakes, and collaborate with policymakers to curb misinformation.

Protecting Privacy in AI Development

Data privacy remains a major ethical issue in AI. Training data for AI models may contain sensitive information, potentially exposing personal user details.

Research conducted by the European Commission found that 42% of generative AI companies lacked sufficient data safeguards.

To enhance privacy and compliance, companies should adhere to regulations like GDPR, ensure ethical data sourcing, and regularly audit AI systems for privacy risks.

The Path Forward for Ethical AI

Balancing AI advancement with ethics is more important than ever. To ensure data privacy and transparency, businesses and policymakers must take proactive steps.

With the rapid growth of AI capabilities, companies must commit to responsible AI practices. Through strong ethical frameworks and transparency, AI innovation can align with human values.

Haley Joel Osment Then & Now!

Haley Joel Osment Then & Now! Anthony Michael Hall Then & Now!

Anthony Michael Hall Then & Now! Michael Jordan Then & Now!

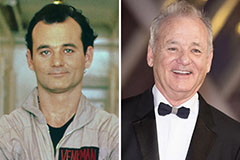

Michael Jordan Then & Now! Bill Murray Then & Now!

Bill Murray Then & Now! Nicholle Tom Then & Now!

Nicholle Tom Then & Now!